The Rise of Codex

About a month ago, GPT‑5 was released, transforming Codex CLI from an underused tool into one that could compete directly with Claude Code. Until then, Claude Code (from Anthropic) dominated the terminal UI (TUI) agent space.

Codex usage has now grown 10x in the past two weeks, and the CLI has finally cracked 100k weekly npm downloads.

A Tale of Two Agents

Here's a quick intro to the two main coding agents:

- Claude Code is Anthropic's terminal-based coding agent that revitalized TUIs and continuously advances coding agent capabilities with features like subagents, slash commands, status lines, and long-running background tasks. It's proven that terminal-based coding agents can handle more than just code.

- Codex refers to several OpenAI tools: a CLI, web-based agent, and VS Code extension. I'm focusing on the VS Code extension and CLI, both powered by GPT‑5 and available directly in your editor or terminal for various coding tasks.

GPT‑5: Not Superintelligence, But a Good Coder

Given the hype around GPT‑5, people expected a revolutionary AI breakthrough. While it didn't deliver that, it has surprised many with its solid coding performance when integrated into the right tools.

In a recent video, I tested Claude Code (Opus 4) and Codex (GPT‑5) on identical coding tasks. They finished neck-and-neck with nearly identical solution quality. Codex wasn't just "good enough"; it matched Anthropic's flagship performance.

Others report similar results. Ian Nuttall's comparison shows Codex competing well in real-world scenarios. Some peers note that Codex occasionally catches bugs Claude Code misses, especially with clear instructions.

Pricing Tells the Story

Cost drives Codex's adoption surge. Codex is included in the $20 ChatGPT Plus plan, and I've used it exclusively since GPT‑5 launched without hitting rate limits.

Claude Code is more restrictive:

- The $20 plan limits you to Sonnet 4, which often times out after ~30 minutes

- Even the $100 tier with Opus 4 has tighter usage caps than expected. You need the $200/month plan to feel truly unleashed

For all-day coding agent reliability, Codex is the dependable choice.

The raw token pricing comparison is stark:

| Model | Input (per 1M tokens) | Output (per 1M tokens) |
|---|---|---|
| GPT‑5 (Codex) | $1.25 | $10 |
| Opus 4 (Claude Code) | $15 | $75 |

GPT‑5 is 12× cheaper on input and 7.5× cheaper on output.
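
As a rough check on those multiples, here is a minimal sketch that recomputes them from the table's figures, assuming they are USD per one million tokens. The session size (2M input tokens, 200k output tokens) is a hypothetical workload chosen purely for illustration, not a measurement, and the function names are made up for this example.

```python
# List prices from the table above, assumed to be USD per 1M tokens.
PRICES = {
    "GPT-5 (Codex)": {"input": 1.25, "output": 10.00},
    "Opus 4 (Claude Code)": {"input": 15.00, "output": 75.00},
}


def price_ratio(expensive: str, cheap: str, kind: str) -> float:
    """How many times more the `expensive` model costs per token of `kind`."""
    return PRICES[expensive][kind] / PRICES[cheap][kind]


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """API cost in USD for one session at the listed per-token prices."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


if __name__ == "__main__":
    opus, gpt5 = "Opus 4 (Claude Code)", "GPT-5 (Codex)"
    print(price_ratio(opus, gpt5, "input"))   # 12.0
    print(price_ratio(opus, gpt5, "output"))  # 7.5

    # Hypothetical session: 2M input tokens, 200k output tokens.
    for model in PRICES:
        print(model, cost_usd(model, 2_000_000, 200_000))
```

Under those assumptions the example session comes to $4.50 on GPT‑5 and $45 on Opus 4. The blended gap depends on the input/output mix of your own workload, and none of this applies directly to flat-rate subscription plans.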

Prompting Style Matters

Prompting style also drives Codex's growth. GPT‑5 handles instructions more directly and literally, while Opus 4 and Sonnet 4 tend toward interpretation and verbosity.

This directness becomes a strength as users learn effective GPT‑5 prompting. Once you find its rhythm, Codex can feel equally capable, or sometimes superior, for daily coding tasks.

This, in my mind, is why it took people a while to figure out how to use Codex effectively, and why the vibe has shifted since the initial backlash against GPT‑5.

Popularity in Context

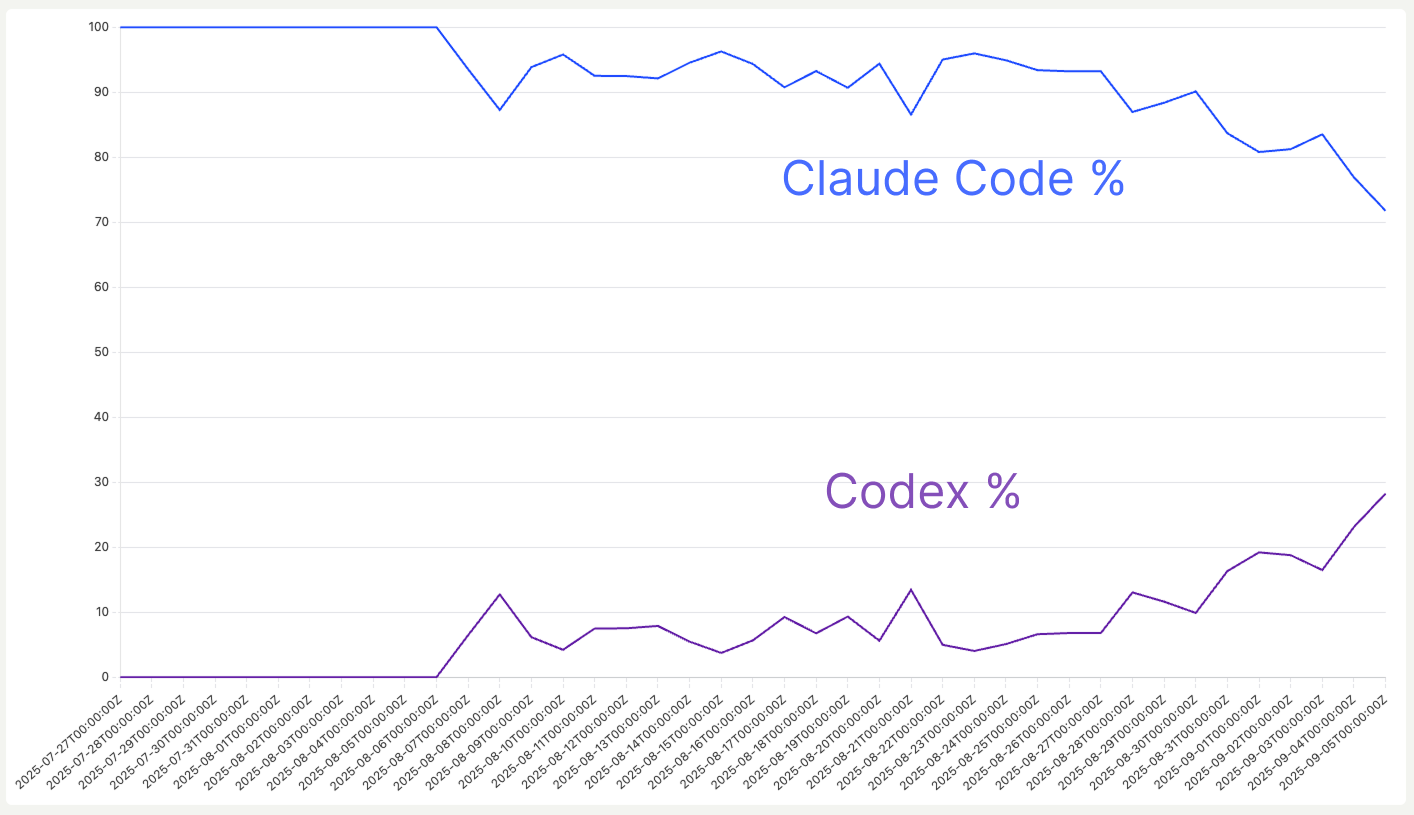

At Terragon Labs, where I help build an AI-powered platform that runs coding agents in parallel within remote cloud environments, we've seen firsthand how developers are adopting different agents. On Terragon, Codex usage has surged to 28% of all agent usage in just one month. The growth trajectory is clear:

Percentage of tasks created on Terragon using Claude Code vs Codex over time

Granted, our user base skews toward early adopters and is not representative of all developers, but I think this is a leading indicator of what will happen in the broader developer population.

What This Means

This marks the first time since the rise of vibe coding that a model has threatened Anthropic's throne.

- OpenAI is catching up fast. Claude Code with Opus 4 once dominated, but GPT‑5 and Codex have shifted the balance.

- Price drives adoption. When one agent costs significantly less with comparable quality, developers switch quickly.

- Anthropic's lead is vulnerable. Codex is gaining ground and other players are catching up. Kimi-K2 and Grok Code have both generated a level of buzz not seen before.

What Is Holding Codex Back

Despite its impressive growth, product polish remains Codex's biggest challenge. The Claude Code team just gets it: they understand what's needed to build a tool developers use day in and day out. They scratch their own itch.

Claude Code is extensible and malleable. In the hands of an expert programmer, it can make you feel unstoppable. From custom slash commands that automate repetitive parts of your workflow, to hooks that make Claude check CI status before finishing a session, to seamless bash command execution without ever leaving the agent—these tiny details are what make it click.

Codex is incredibly spartan by comparison. While the actual coding performance is on par or sometimes better, the CLI lacks the small bits of craft that create that "just works" feeling. The core functionality is there, but the thoughtful touches that make daily usage delightful are missing.

This polish gap is what Codex needs to close to truly compete long-term. Raw capability brought them here, but sustained developer loyalty requires the kind of attention to detail that makes tools indispensable.

Wrapping Up

Since GPT‑5 launched, I've used Codex almost exclusively as my daily driver. On the ChatGPT Plus plan, I haven't hit a rate limit yet. I barely miss Claude Code.

The competitive landscape will keep evolving, but one thing is clear: Codex is no longer just an alternative for me. It's becoming my go-to coding agent.